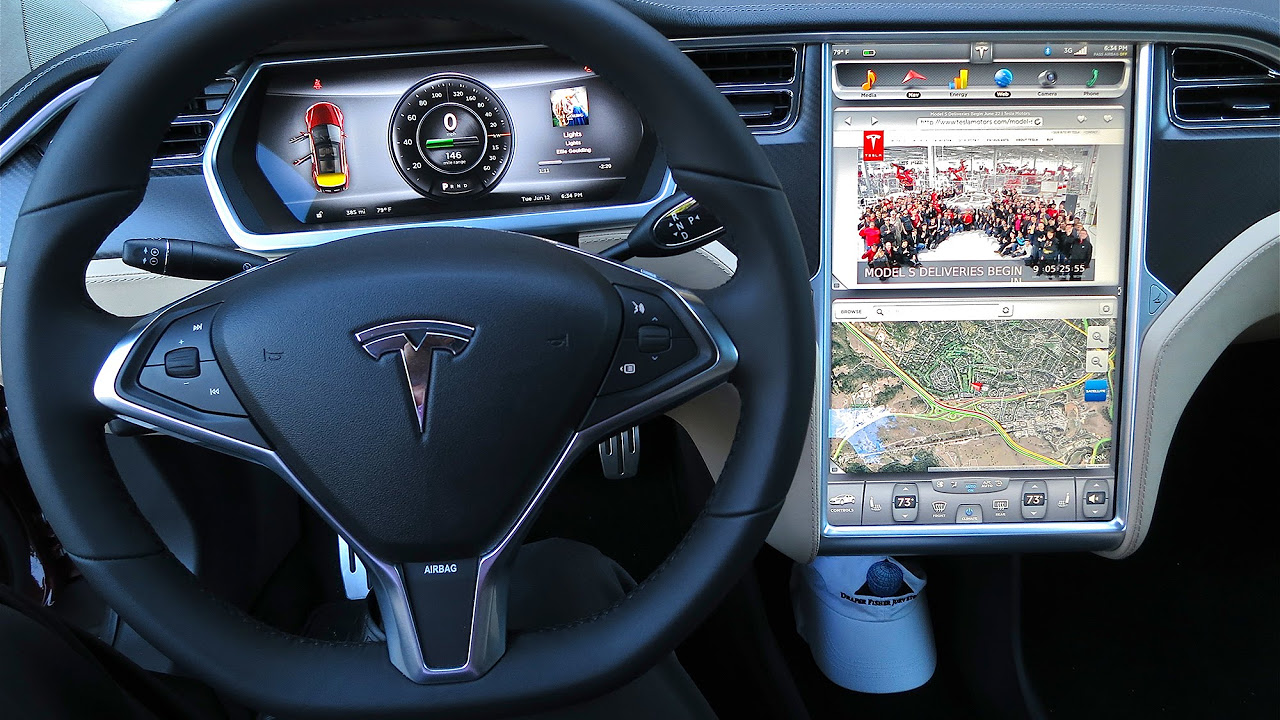

Tesla Autopilot is an advanced driver-assistance system (ADAS) developed by Tesla that amounts to partial vehicle automation (Level 2 automation, as defined by SAE International). Tesla provides "Base Autopilot" on all vehicles, which includes lane centering and traffic-aware cruise control.

Owners may purchase or subscribe to Full Self-Driving (FSD), which adds semi-autonomous navigation that responds to traffic lights and stop signs, lane change assistance, self-parking, and the ability to summon the car from a garage or parking spot.

The company's stated intent is to offer fully autonomous driving (SAE Level 5) at a future time, acknowledging that technical and regulatory hurdles must be overcome to achieve this goal. The names Autopilot and Full Self-Driving are controversial because the vehicles remain at Level 2 automation: they are not "fully self-driving" and require active driver supervision.

Tesla Autopilot for Disabled Drivers in Texas

The company claims the features reduce accidents caused by driver negligence and fatigue from long-term driving. Collisions and deaths involving Tesla vehicles with Autopilot engaged have drawn the attention of the press and government agencies. Industry observers and academics have criticized Tesla's decision to use untrained consumers to validate beta features as dangerous and irresponsible.

Despite being investigated by the National Highway Traffic Safety Administration (NHTSA) over its controversial semi-autonomous driving mode, Autopilot, Tesla hasn't faced any major consequences. Tesla models new and old continue to roam city streets and highways with technology that, while technically an SAE Level 2 semi-autonomous driving mode, can be misused as a fully autonomous system.

Hence the controversy over the name Autopilot, which is among the reasons for the many investigations into Tesla by the federal government and media outlets. The latest investigation, by the Wall Street Journal, attempts to identify why some Teslas have crashed.

The WSJ's roughly 11-minute video, which requires a subscription to view, is the second in a series that puts Tesla's Autopilot system under the microscope. It links the cause of some crashes to Autopilot's overreliance on computer vision, which is essentially a way of teaching computers to understand information based on digital inputs such as video.

1000 Tesla Crashes Reported to NHTSA

Automakers in the U.S. have had to report all serious real-world crashes involving SAE Level 2 or higher automated driving systems since NHTSA issued a General Order on crash reporting in June 2021.

Tesla has reportedly submitted more than 1,000 crashes to NHTSA since 2016, but the WSJ claims most of that data is hidden from the public because Tesla considers it proprietary. However, the outlet says it worked around that by gathering reports from individual states and cross-referencing them with crash data that Tesla submitted to NHTSA.

Among the 222 crashes the WSJ says it pieced together for this report, the paper said 44 occurred when a Tesla with Autopilot activated suddenly veered, while another 31 crashes reportedly occurred when Autopilot failed to yield or stop for an obstacle.
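To put those counts in proportion, here is a minimal Python sketch that turns the three figures quoted above into percentages. The variable names and the `share` helper are made up for illustration; only the numbers 222, 44 and 31 come from the WSJ report as described here.

```python
# Figures as reported by the WSJ (per the paragraph above).
TOTAL_CRASHES = 222      # crashes the WSJ says it pieced together
VEERED = 44              # Autopilot engaged, car suddenly veered
FAILED_TO_STOP = 31      # Autopilot failed to yield or stop for an obstacle


def share(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


veered_pct = share(VEERED, TOTAL_CRASHES)
failed_pct = share(FAILED_TO_STOP, TOTAL_CRASHES)
# Crashes that fall into neither of the two categories named in the report.
other = TOTAL_CRASHES - VEERED - FAILED_TO_STOP

print(f"Veered: {veered_pct}%")
print(f"Failed to stop: {failed_pct}%")
print(f"Other crashes: {other}")
```

In other words, the two categories the paper singles out account for roughly a fifth and a seventh of the reviewed crashes, and the remaining crashes are not broken down in the figures quoted here.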

Incidents where the Tesla failed to stop are said to result in the most serious injuries or death. The WSJ had experts analyze one fatal accident in which Autopilot didn't recognize an overturned truck on the highway and the car crashed into it.

That is what some experts interviewed by the Journal said is evidence of Autopilot's gravest flaw. Unlike some other automakers, which use radar, computer vision and lidar laser imaging to detect objects, Tesla mainly relies on camera-based computer vision, with radar as a backup on some models.

John Bernal, who was fired from Tesla in 2022 for posting videos of Autopilot failing, tells the WSJ that he has found that the cameras used on some Tesla models are not calibrated properly. He says that when the cameras don't see the same thing, they can have problems identifying obstacles. And, as the investigation suggests, Tesla's overreliance on cameras to control Autopilot can lead to crashes.
